I’ve hesitated to really talk much about AI, mostly because I’ve been on the fence about whether I think it’s got the potential to save us or destroy us, and depending on who and what you read, it seems like it’s 50/50 either way. It’s just too soon to tell and I think these technologies are being adopted so quickly that we haven’t given ourselves enough time to think about the ramifications or the potential. Instead, everyone is rushing to become an armchair expert so they can monetize it into oblivion before they even understand it. Honestly, a lot of the talk around AI feels like a YouTube short that promises to teach you how to make thousands of dollars a month from affiliate marketing and drop shipping. Still, some of the image generation tools are interesting and I’ve been wondering whether or not there’s a place for AI in my creative workflow. I know there are a ton of legal and even moral and ethical questions that I still need to sort out for myself, but for the purposes of this Iteration, I’m going to put those aside and just focus on the tools themselves.

A month or so ago, I tried an experiment and used Stable Diffusion to generate some ideas for a product that I’m developing for a new personal project. I had already done several very rough sketches, but I decided to see what Stable Diffusion could produce. I described the details of the product in the prompt field and tapped the “Generate Image” button. While the results weren’t spectacular or even usable as-is, the images it did generate contained a few design elements that I hadn’t considered, and they sent me back to the drawing table to iterate on those elements and refine them into something I could potentially use. I know that this is only one example, but it was enough to show me that, as a solo creative without an actual design team, I can absolutely see the value in using AI tools as an addition to the ideation or prototyping phase of creating new products.

What about photography? Honestly, this is where it gets a little muddy for me. On one hand, I’ve seen some really incredible images that were created using tools like MidJourney and Stable Diffusion, but to me (and I can’t stress enough that this is just for me) it’s not photography. It’s impressive and it might even be photorealistic, but I still can’t call it photography. I’ve been taking pictures with cameras for nearly 50 years, and aside from a handful of exceptions, every photograph I’ve taken has required light to travel through an aperture of some sort, either a lens or a pinhole, before striking a light-sensitive surface that records the image that the camera “saw.” And the camera can be analog or digital or a Quaker oatmeal box, which is what we used in my high school photo class. An exception to that would be photograms or cyanotypes, which don’t require a camera but still do require light. So for me, no light, no photograph. It’s that simple.

However, and this is the muddy bit, recent conversations with a couple of photographer friends about how they use AI have me wondering whether there’s room in my workflow for using it as a post-processing tool to modify existing photographs rather than creating images from scratch. For example, one friend used Photoshop’s new Generative Fill to re-crop photos from one aspect ratio to another and to fill in the missing background data. And I’ve got to tell you, the results are pretty seamless. If he hadn’t told me, I never would have known or even suspected that the images weren’t out of camera.

The other friend I was talking to has been experimenting with using AI alongside his own photographs to create sort of hybrid digital composites, for lack of a better description. They aren’t composites in the traditional Photoshop multi-layer sense because the images are generated entirely by MidJourney. He imports his own photographs to use as the starting points and then writes custom prompts to generate the missing elements. Just as with the first friend, the results are pretty seamless, and they’re just going to get better. Keep in mind that the first version of MidJourney only came out about a year and a half ago and the differences in the results between it and the current 5.2 version released in June are staggering. That said, from what little I’ve seen on the actual prompt creation side, you still don’t have a ton of control over the particulars of the output. It’s certainly getting better, but at the moment you are definitely limited, which may be fine for mood boards and personal work, but I just can’t see clients being okay with not being able to make specific tweaks and changes.

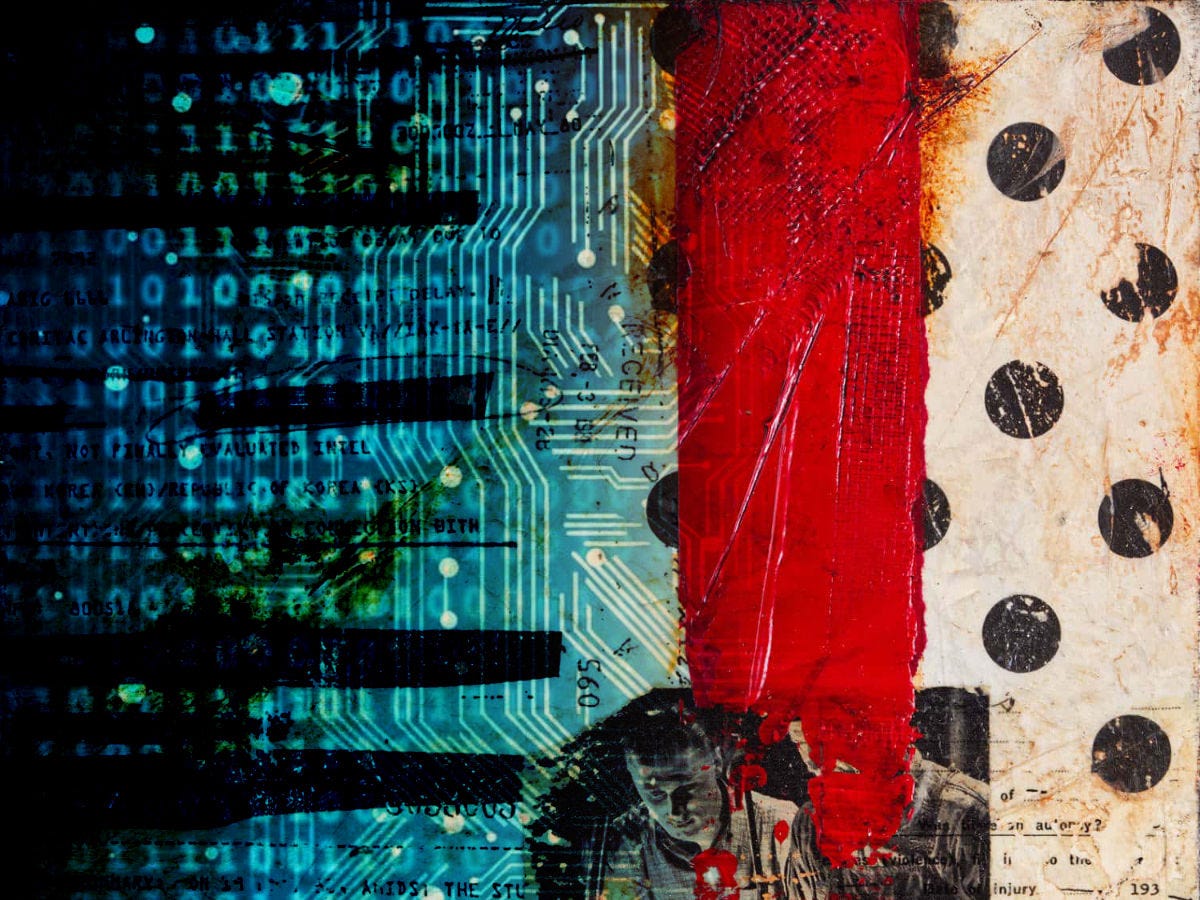

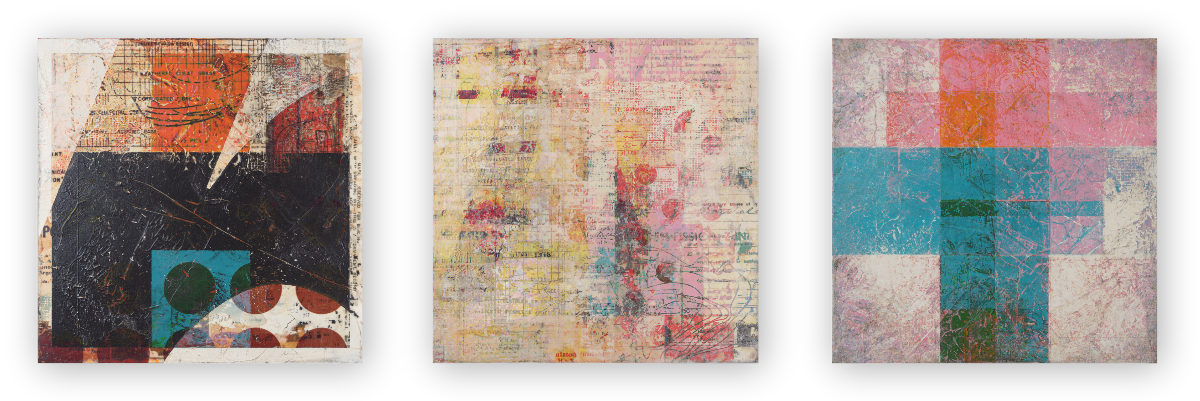

I don’t see myself using AI to create images as much as I can see using it to modify images, either paintings or photographs, that I’ve already made, at least for now. For instance, the idea of using Generative Expand in Photoshop to change the aspect ratio of a painting seems interesting if it works. I’ve made a few square paintings that I would actually love to see as 4:3 or even 16:9, and I think that since so much of my work is abstract, the results could work pretty well. I don’t even know whether this is possible, but I would love to be able to “train” MidJourney on several pieces of my own work in order to digitally “remix” them into something new. Then again, even if something like that is possible, I don’t think it would offer me the level of control over the results that I expect and have grown accustomed to. I’ve started doing something similar in Affinity Photo by importing stacks of different paintings and cycling through the various blend modes on individual layers. The results are interesting, and the advantage there is being able to add or subtract or mask elements and layers to create a specific look or effect. Much of MidJourney seems to be “you get what you get,” but to be fair, maybe that’s completely inaccurate. I don’t really have enough experience with it to know what is and isn’t possible.

I have no idea where any of this is going and I don’t mind being transparent about what I do and don’t know about it. My guess is that there are quite a few of you in a similar spot trying to figure this stuff out. Whether it’s here or on my website, I’ll start sharing my own experiments in the hope that they can offer some ideas and maybe provide a bit of clarity about whether and how AI might fit into a non-AI creative workflow.

Thanks so much for reading.

QUESTIONS

Are you currently using AI in your workflow? If so, how?

Hit reply, leave a comment, or email me at talkback@jefferysaddoris.com.

I'll pass on AI for my own practice, though I admit I've used it for my day job, to generate ideas for events and communications. I predominantly use historical and alternative processes and create camera-less images, so it makes no sense to me to use AI to create an ersatz version of a 150+ year old process. Hard pass. Besides, I like getting my hands dirty and having a physical thing in my hand that I've created. Yeah, I know AI images can be printed, but it just wouldn't feel the same.

I'm also still struggling with embedding AI in my photography. I was working on a personal project where I combined my own images with AI-generated ones, based on extensive prompts, but to be honest, I kinda lost interest after a while. I think it might be useful in some ways, but the lack of control, as you mentioned, holds me back. It is only the beginning, so it will be way better in the near future. Exciting times.